Cloud Security Lab a Week (S.L.A.W)

Skills Solution: Investigate Your Own AWS Attack with Athena

Time for some answers.

CloudSLAW is, and always will be, free. But to help cover costs and keep the content up to date we have an optional Patreon. For $10 per month you get access to our support Discord, office hours, exclusive subscriber content (not labs — those are free — just extras) and more. Check it out!

Prerequisites

This is the solution for the challenge Skills Challenge: Investigate Your Own AWS Attack with Athena.

The Solution

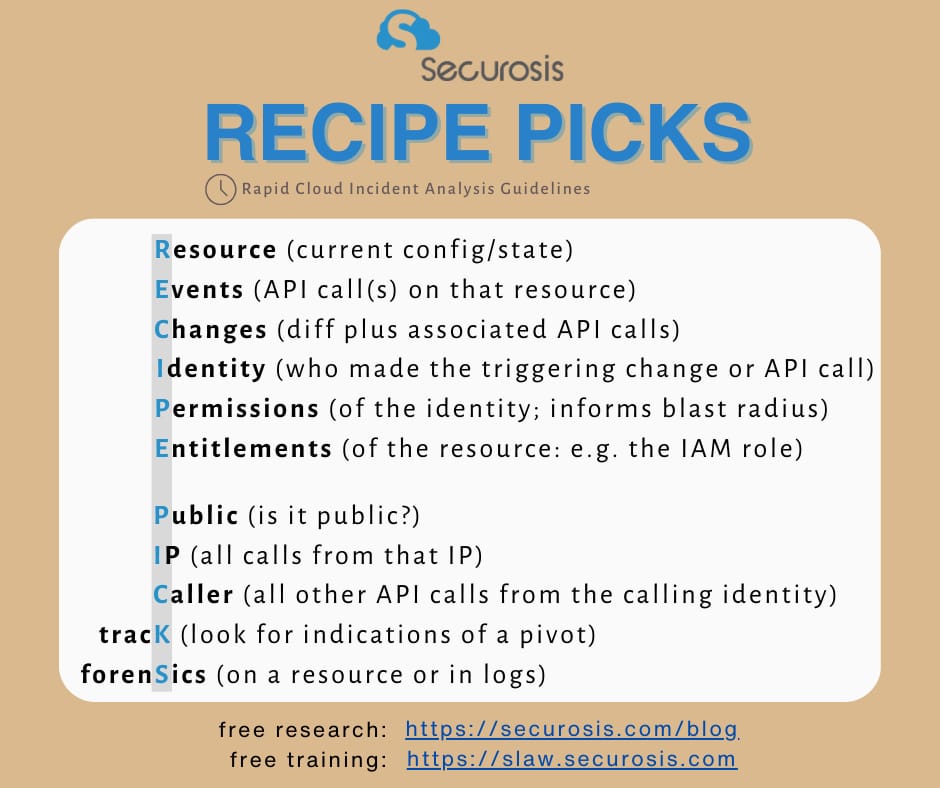

The prior lab simulated an attack in your account to generate logs we can use to start learning the basics of security queries for Athena and how to investigate incidents. This is all based on my RECIPE PICKS mnemonic, which I wrote up in this blog post.

In this lab I’ll walk you through my process and how I ran through the challenge. Remember, this is not a full incident investigation — we are still in the early learning phase, so there are parts I skipped!

Video Walkthrough

To start, here’s the graphical mnemonic, and I’ll run through everything in order:

Maybe I should use an AI to make this look cooler!

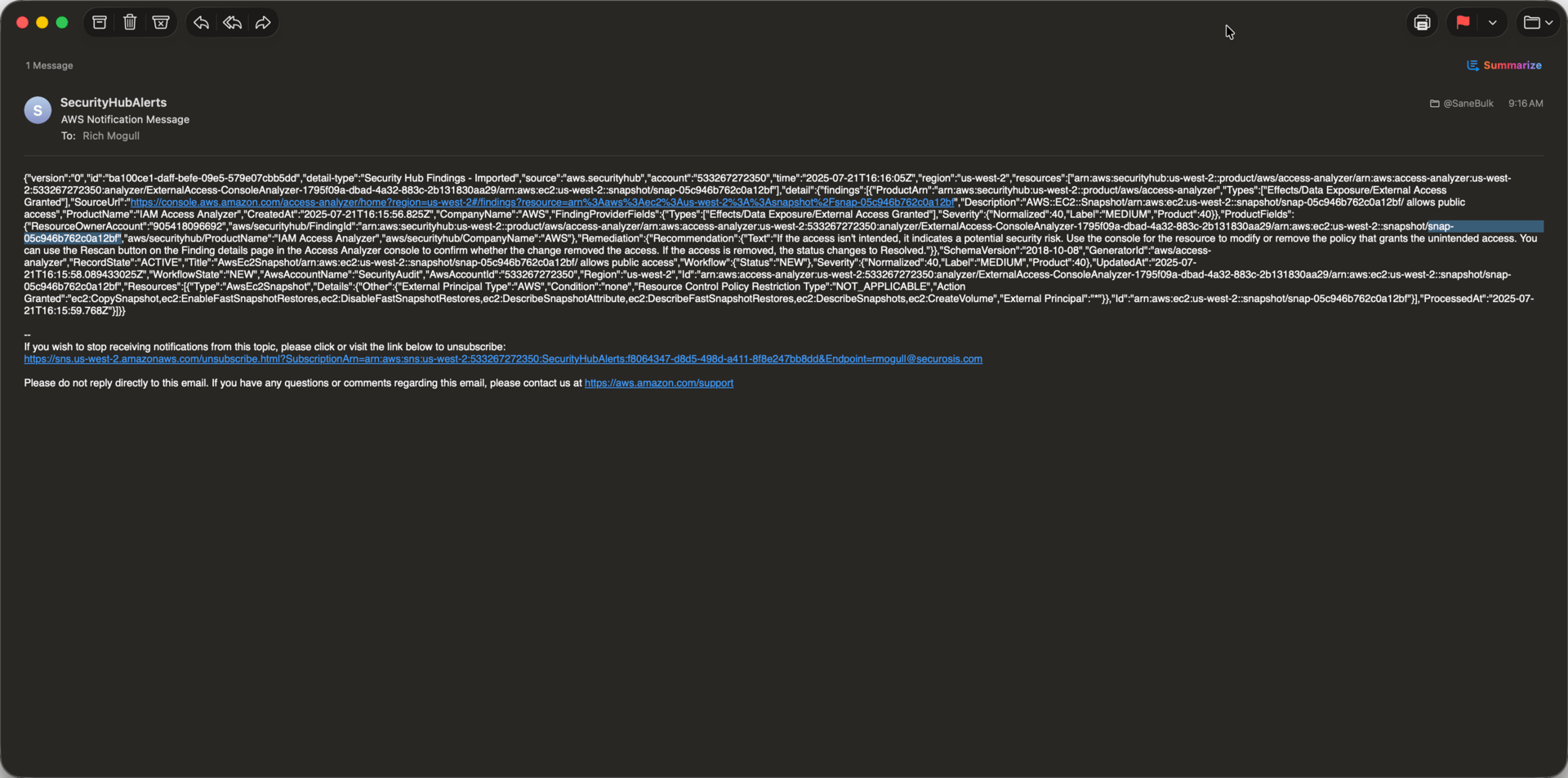

Now, how do I even know what I need to investigate? Well, it turns out I got this handy email from Access Analyzer via Security Hub (since we configured email alerts… many labs ago).

Guiding Principles

I use two phrases in any investigation that come from my work as a paramedic. Sick or Not Sick means as I go through the incident, I’m always trying to see whether there is a key indicator that things are bad. In security this means… does this indicate an attack or a breach? The second is Stop the Bleed… in other words, “Do I need to take action now so this doesn’t get worse?” Both of those are in my head whenever I’m investigating, and I ask myself these questions whenever I see something new.

Resource

That email tells me I have a public snapshot, and it provides the account ID and the snapshot ID. The first thing I did was copy that snapshot ID and put it into a text file for reference.

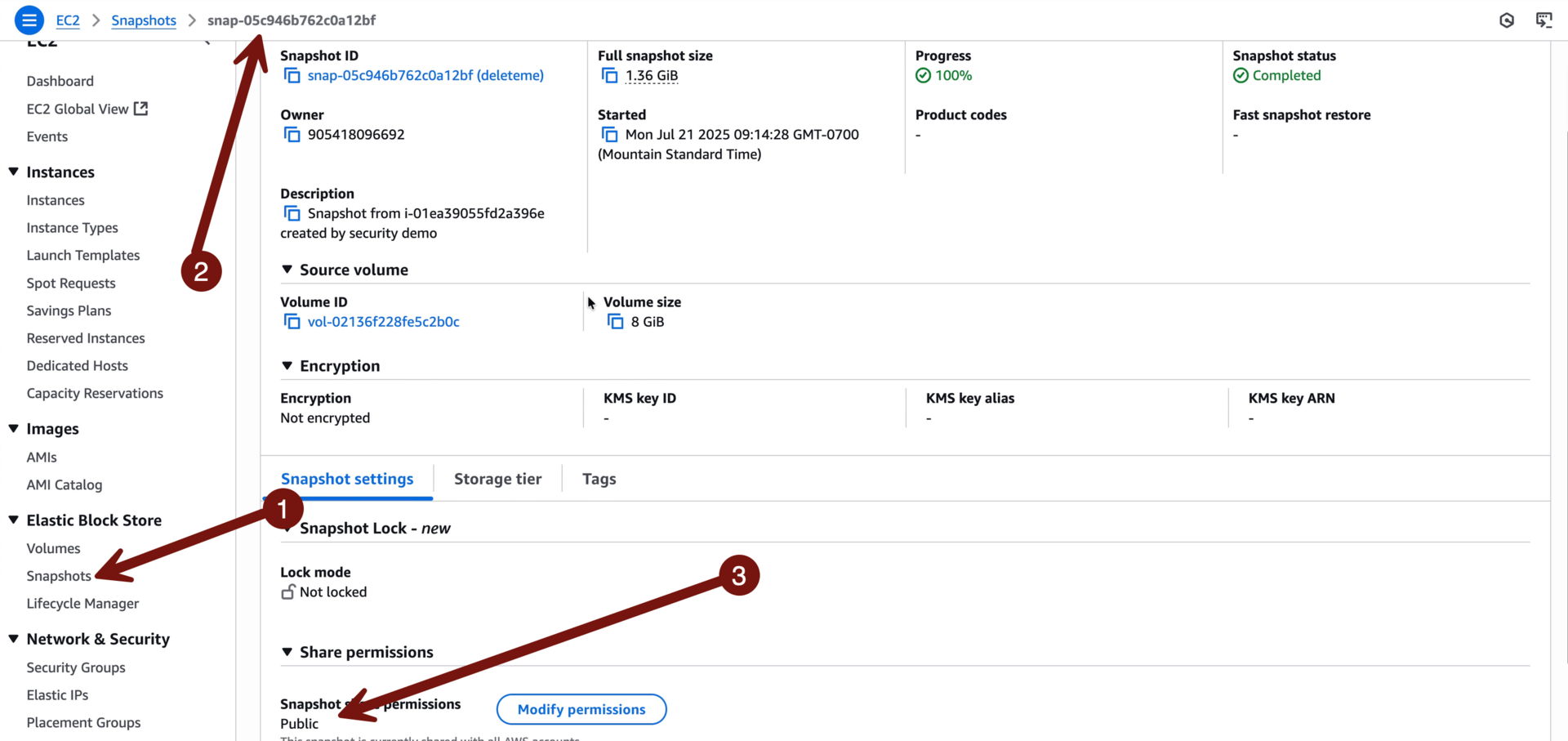

Then I logged into that account, which will always be via your sign-in portal > TestAccount1 > AdministratorAccess, and went to view the snapshot via EC2 > Snapshots > deleteme. Remember, we did not delete the CloudFormation stack yet, so you should still have the resources we need. If you DID delete it before investigating, you can run it again or just rely on what you see in the logs.

I’ll call this one sick, so I want to stop the bleed. Why? Because it’s a public snapshot, and I really shouldn’t have those if I wasn’t expecting them. If you want, you can click Modify permissions and make the snapshot private.

In a real investigation I might also start looking at the instance the snapshot is based on, but we’ll skip that for this exercise.

Events

Go to sign-in portal > SecurityAudit > SecurityFullAdmin > Athena to run your queries.

This is the query we ran last week. I like to know the event, when it happened, and who did it. In our sample we don’t pull the requestParameters, but I often also grab those to see the full parameters of the API call.

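```sql
-- Every API call whose request or response mentions the snapshot.
-- Replace snap-YOUR ID with the snapshot ID from the alert email.
SELECT
  useridentity.arn,
  eventname,
  sourceipaddress,
  eventtime,
  resources
FROM cloudtrail_logs.organization_trail
WHERE requestparameters LIKE '%snap-YOUR ID%'
   OR responseelements LIKE '%snap-YOUR ID%'
ORDER BY eventtime
```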

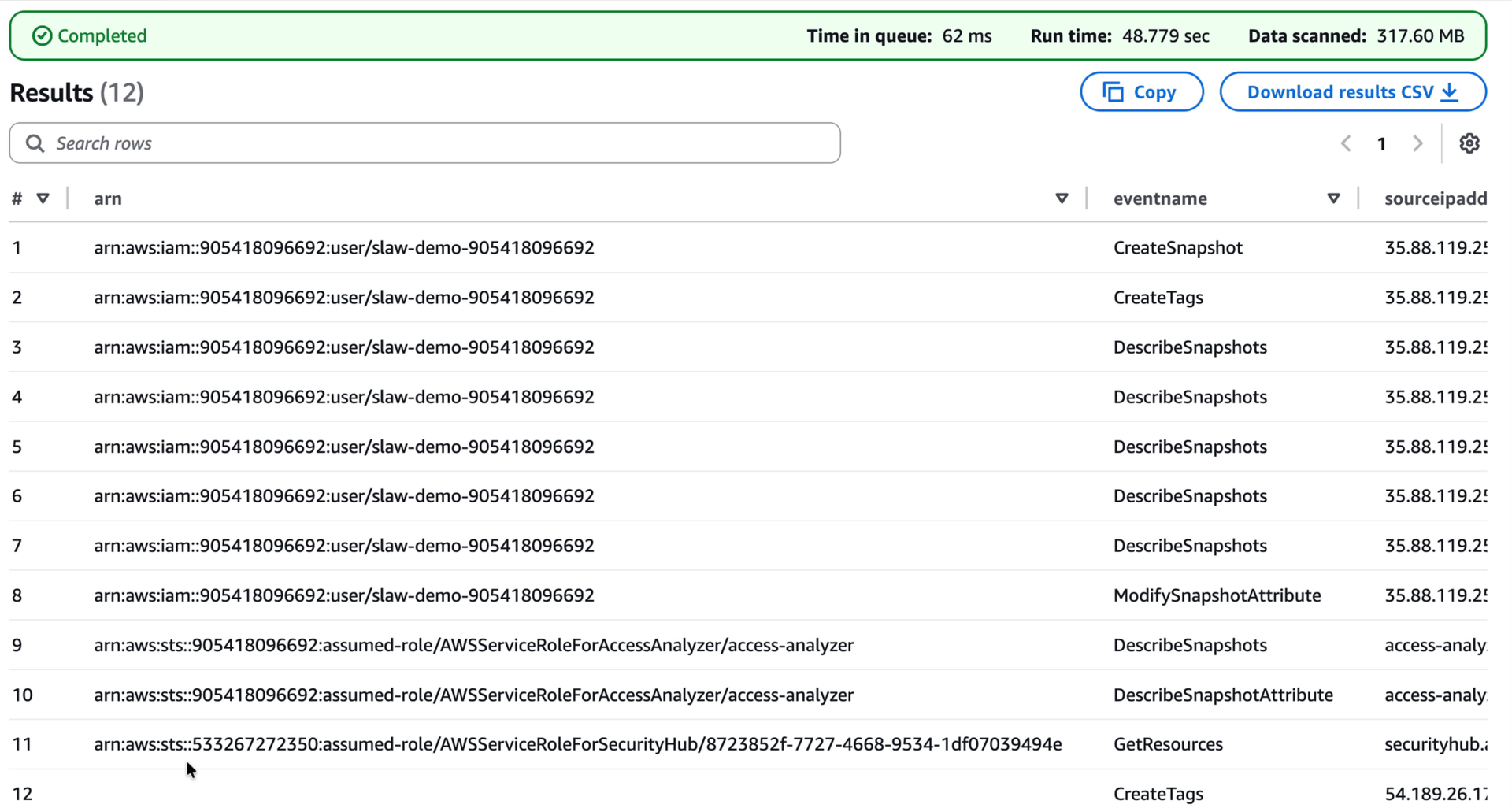

As a reminder, this shows every API call where the request or response includes that snapshot ID. I can see the original create call, the tag (with the deleteme name), a bunch of describe calls as the code waited for the snapshot to finish (you’ll see these a lot in logs), and then ModifySnapshotAttribute, which I just happen to know is the call that made it public by allowing anyone to create volumes from the snapshot.
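Those repeated describe calls are polling noise. If you copy the event names out of the results (already ordered by eventtime), a few lines of Python can collapse consecutive repeats so the real sequence stands out. This is a minimal sketch; the event list below is made up to resemble this lab, not copied from real results.

```python
from itertools import groupby


def collapse_repeats(event_names):
    """Collapse consecutive duplicate event names into (name, count) pairs,
    so a polling loop such as repeated DescribeSnapshots becomes one entry."""
    return [(name, len(list(group))) for name, group in groupby(event_names)]


# Hypothetical, time-ordered event names for illustration only.
names = [
    "CreateSnapshot",
    "CreateTags",
    "DescribeSnapshots",
    "DescribeSnapshots",
    "DescribeSnapshots",
    "ModifySnapshotAttribute",
]

for name, count in collapse_repeats(names):
    print(f"{name} x{count}")
```

This prints one line per step (CreateSnapshot x1, CreateTags x1, DescribeSnapshots x3, ModifySnapshotAttribute x1). Only adjacent repeats are merged, so a describe call that shows up again later still appears in its own place in the timeline.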

Nearly every call came from the slaw-demo IAM user, although you can also see Access Analyzer and Security Hub at work.
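To tally who touched the snapshot, the same substring filter as the WHERE clause can be applied to exported CloudTrail records and counted per caller. A sketch, assuming the records are dicts in CloudTrail’s JSON layout (eventName, userIdentity, requestParameters, responseElements). Calls made by AWS services often carry no ARN, so the sketch falls back to invokedBy or the identity type. The snapshot ID, account ID, and records are hypothetical.

```python
import json
from collections import Counter


def mentions(record, resource_id):
    """Mirror of the Athena WHERE clause: does resource_id appear anywhere in
    requestParameters or responseElements?"""
    for field in ("requestParameters", "responseElements"):
        value = record.get(field)
        if value is not None and resource_id in json.dumps(value):
            return True
    return False


def calls_by_identity(records, resource_id):
    """Count matching calls per caller, preferring the ARN when present."""
    counts = Counter()
    for record in records:
        if not mentions(record, resource_id):
            continue
        identity = record.get("userIdentity") or {}
        caller = identity.get("arn") or identity.get("invokedBy") or identity.get("type", "unknown")
        counts[caller] += 1
    return counts


SNAP = "snap-0123456789abcdef0"  # hypothetical snapshot ID
USER = "arn:aws:iam::111122223333:user/slaw-demo-111122223333"  # hypothetical ARN

# Hypothetical, trimmed CloudTrail records
records = [
    {"eventName": "CreateSnapshot", "userIdentity": {"type": "IAMUser", "arn": USER},
     "requestParameters": {"volumeId": "vol-0abc"}, "responseElements": {"snapshotId": SNAP}},
    {"eventName": "ModifySnapshotAttribute", "userIdentity": {"type": "IAMUser", "arn": USER},
     "requestParameters": {"snapshotId": SNAP}, "responseElements": None},
    {"eventName": "DescribeInstances", "userIdentity": {"type": "IAMUser", "arn": USER},
     "requestParameters": {}, "responseElements": None},
]

print(calls_by_identity(records, SNAP))
```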

Changes

These are the before and after states. For the snapshot in this lab we see the full history (not public, then public). But this is a very simple demo, and in a larger incident we would need full change management tracking from AWS Config or a CSPM tool. That’s more than we have time for today.
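For a single snapshot, CloudTrail alone can give a rough before/after view by replaying the permission changes in time order. A minimal sketch, assuming the snapshot starts private and that ModifySnapshotAttribute request parameters use a createVolumePermission object with add/remove item lists, where the group "all" means public. It ignores other ways the state can change (ResetSnapshotAttribute, for example), which is one reason AWS Config or a CSPM tool is the better source at scale. The records are hypothetical.

```python
def public_timeline(records, snapshot_id):
    """Replay permission changes for one snapshot and return a list of
    (eventTime, is_public) tuples, one per ModifySnapshotAttribute call."""
    public = False  # assumption: the snapshot starts out private
    timeline = []
    # eventTime strings share one ISO-8601 format, so they sort chronologically
    for record in sorted(records, key=lambda r: r["eventTime"]):
        params = record.get("requestParameters") or {}
        if record.get("eventName") != "ModifySnapshotAttribute":
            continue
        if params.get("snapshotId") != snapshot_id:
            continue
        # Assumed request shape: {"createVolumePermission": {"add": {"items": [...]}}}
        perms = params.get("createVolumePermission") or {}
        added = [item.get("group") for item in (perms.get("add") or {}).get("items", [])]
        removed = [item.get("group") for item in (perms.get("remove") or {}).get("items", [])]
        if "all" in added:
            public = True
        if "all" in removed:
            public = False
        timeline.append((record["eventTime"], public))
    return timeline


SNAP = "snap-0123456789abcdef0"  # hypothetical
records = [
    {"eventName": "ModifySnapshotAttribute", "eventTime": "2024-01-01T00:10:00Z",
     "requestParameters": {"snapshotId": SNAP,
                           "createVolumePermission": {"remove": {"items": [{"group": "all"}]}}}},
    {"eventName": "ModifySnapshotAttribute", "eventTime": "2024-01-01T00:05:00Z",
     "requestParameters": {"snapshotId": SNAP,
                           "createVolumePermission": {"add": {"items": [{"group": "all"}]}}}},
]

print(public_timeline(records, SNAP))
```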

Identity

Who made the API calls? We can see that it’s an IAM user (bad on you) with the name slaw-demo-<account ID>. Paste this into your notes, and we will come back later. There’s also an interesting CreateTags, with no associated identity, which we will ignore today (unless you want to look yourself), since it’s something internal to AWS.
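Because the lab names the user slaw-demo-<account ID>, splitting the caller ARN into its parts is a quick way to confirm which account and user you are dealing with before pasting them into your notes. A sketch that assumes the standard IAM user ARN layout, arn:partition:iam::account-id:user/[path/]name; the account ID below is hypothetical.

```python
def parse_iam_user_arn(arn):
    """Split an IAM user ARN (arn:partition:iam::account-id:user/[path/]name)
    into its partition, account ID, and user name."""
    parts = arn.split(":", 5)
    if len(parts) != 6 or parts[0] != "arn" or parts[2] != "iam":
        raise ValueError(f"not an IAM ARN: {arn}")
    resource = parts[5]
    if not resource.startswith("user/"):
        raise ValueError(f"not an IAM user ARN: {arn}")
    return {
        "partition": parts[1],
        "account": parts[4],
        "user": resource.rsplit("/", 1)[-1],  # drop any IAM path
    }


# Hypothetical account ID for illustration
info = parse_iam_user_arn("arn:aws:iam::111122223333:user/slaw-demo-111122223333")
print(info)
# The lab names the user after the account, so this should print True
print(info["user"] == f"slaw-demo-{info['account']}")
```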

At this point we know that IAM user created a snapshot of an instance and made it public, so we have a good sense of what’s going on here.

Permissions

This tells us the IAM blast radius of that IAM user. What permissions does it have? How much damage can it do?

To make life easy, I just logged into TestAccount1 > IAM > Users, clicked that user, and looked at the permissions. Instead of a truncated screenshot, here’s the full permissions policy:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": [
        "iam:GetUser",
        "sts:GetCallerIdentity"
      ],
      "Resource": "*",
      "Effect": "Allow"
    },
    {
      "Action": [
        "ec2:DescribeInstances",
        "ec2:DescribeVolumes",
        "ec2:DescribeSnapshots",
        "ec2:DescribeSnapshotAttribute"
      ],
      "Resource": "*",
      "Effect": "Allow"
    },
    {
      "Action": [
        "ec2:CreateSnapshot",
        "ec2:CreateTags"
      ],
      "Resource": "*",
      "Effect": "Allow"
    },
    {
      "Action": [
        "ec2:ModifySnapshotAttribute"
      ],
      "Resource": "*",
      "Effect": "Allow"
    }
  ]
}
```

I’d call this patient sick. Why? Because that IAM user can describe and snapshot any of my instances, and change snapshot attributes to make those snapshots public!
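To see the blast radius as yes/no answers, here is a heavily simplified sketch of checking one identity policy for specific actions. It is not AWS’s evaluation engine: it only applies "explicit Deny wins, then any matching Allow, otherwise implicit deny" to a single policy, and it ignores Condition blocks, NotAction/NotResource, SCPs, permission boundaries, and resource policies. Wildcards are matched with Python’s fnmatch, which (unlike IAM) also treats square brackets as special.

```python
from fnmatch import fnmatchcase


def _as_list(value):
    """IAM allows a single string or a list; normalize to a list."""
    return value if isinstance(value, list) else [value]


def is_allowed(policy, action, resource="*"):
    """Greatly simplified check against ONE identity policy: an explicit Deny
    wins, then any matching Allow, otherwise implicit deny. Ignores Condition,
    NotAction/NotResource, SCPs, permission boundaries, and resource policies."""
    allowed = False
    for stmt in _as_list(policy.get("Statement", [])):
        # Action names are case-insensitive; '*' and '?' act as wildcards
        patterns = [a.lower() for a in _as_list(stmt.get("Action", []))]
        if not any(fnmatchcase(action.lower(), p) for p in patterns):
            continue
        if not any(fnmatchcase(resource, r) for r in _as_list(stmt.get("Resource", []))):
            continue
        if stmt.get("Effect") == "Deny":
            return False
        if stmt.get("Effect") == "Allow":
            allowed = True
    return allowed


# The slaw-demo policy from above, condensed
policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Action": ["iam:GetUser", "sts:GetCallerIdentity"], "Resource": "*", "Effect": "Allow"},
        {"Action": ["ec2:DescribeInstances", "ec2:DescribeVolumes",
                    "ec2:DescribeSnapshots", "ec2:DescribeSnapshotAttribute"],
         "Resource": "*", "Effect": "Allow"},
        {"Action": ["ec2:CreateSnapshot", "ec2:CreateTags"], "Resource": "*", "Effect": "Allow"},
        {"Action": ["ec2:ModifySnapshotAttribute"], "Resource": "*", "Effect": "Allow"},
    ],
}

for action in ("ec2:CreateSnapshot", "ec2:ModifySnapshotAttribute", "ec2:DeleteSnapshot"):
    print(action, is_allowed(policy, action))
```

The first two print True and DeleteSnapshot prints False, which matches reading the policy by eye: this user can copy your data out, but not destroy it.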

In a real incident, I’d probably add a DenyAll policy to stop the bleed, but not today.
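A deny-all policy is tiny, because an explicit Deny overrides any Allow the user has elsewhere. The sketch below only builds and prints the policy JSON you could attach as an inline policy; actually attaching it is left out on purpose. The Sid value is a hypothetical label. AWS also offers a managed policy, AWSDenyAll, for the same purpose.

```python
import json


def deny_all_policy():
    """Build an IAM policy document that explicitly denies every action on
    every resource. An explicit Deny beats any Allow in the user's other policies."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            # "DenyAllDuringIncident" is a hypothetical statement ID
            {"Sid": "DenyAllDuringIncident", "Effect": "Deny", "Action": "*", "Resource": "*"}
        ],
    }


print(json.dumps(deny_all_policy(), indent=2))
```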

Entitlements

Okay, sometimes you just need to make a mnemonic work! I wrote this before any nifty AI could help out. In this case we are shifting to the permissions of the resource. Does a snapshot ever have permissions? Nope. But the instance it’s based on? Maybe.

You can go into EC2 > Instances and poke around to see whether your instance has an attached IAM role (it doesn’t). It might still have embedded credentials for something, but save some time and don’t bother; I just gave you the answer. Too many instances to figure out which one is affected? You can pull the ID of the storage volume the snapshot was based on from the requestParameters of the CreateSnapshot call, then look at that volume to see which instance it is attached to.
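That volume-to-instance lookup can be sketched as two small functions. This assumes the CreateSnapshot record’s requestParameters carry a volumeId key, and that you have a DescribeVolumes response in the shape boto3’s ec2.describe_volumes() returns (a Volumes list, each with Attachments that name an InstanceId). All IDs below are hypothetical.

```python
def volume_from_create_snapshot(record):
    """Return the source volume ID from a CreateSnapshot CloudTrail record,
    or None if this is some other event. Assumes a 'volumeId' request key."""
    if record.get("eventName") != "CreateSnapshot":
        return None
    return (record.get("requestParameters") or {}).get("volumeId")


def instances_for_volume(describe_volumes_response, volume_id):
    """List the instance IDs a volume is attached to, from a
    DescribeVolumes-style response dict."""
    return [
        attachment["InstanceId"]
        for volume in describe_volumes_response.get("Volumes", [])
        if volume.get("VolumeId") == volume_id
        for attachment in volume.get("Attachments", [])
    ]


# Hypothetical CloudTrail record and DescribeVolumes response
event = {"eventName": "CreateSnapshot",
         "requestParameters": {"volumeId": "vol-0abc1234def567890"}}
volumes = {"Volumes": [
    {"VolumeId": "vol-0abc1234def567890",
     "Attachments": [{"InstanceId": "i-0123456789abcdef0", "State": "attached"}]},
    {"VolumeId": "vol-0fff0000eeee11112", "Attachments": []},
]}

vol = volume_from_create_snapshot(event)
print(vol, instances_for_volume(volumes, vol))
```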

Like I said, today is just the basics.

Public

Been there, done that, and the answer was in the alert that said “this thing is public.”

IP

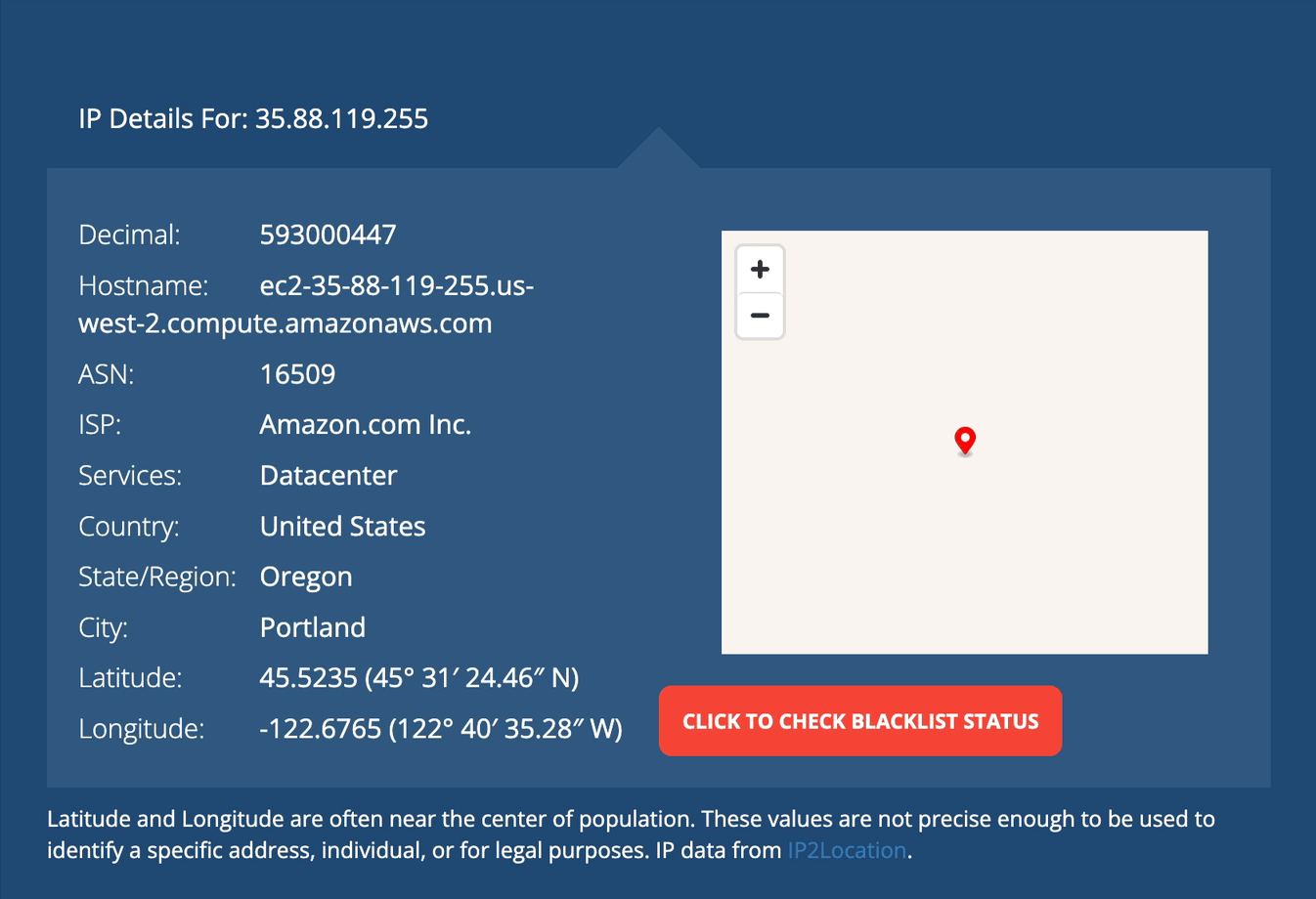

For this I did two things. First, I pulled the IP from the query results and ran it through an IP checker (whatismyip.com).

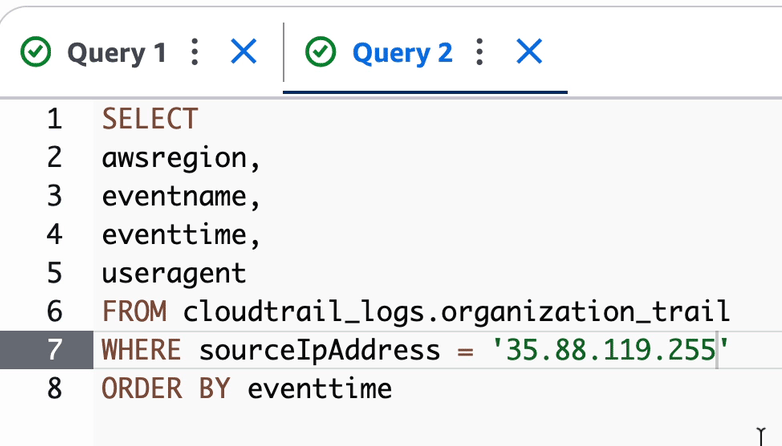

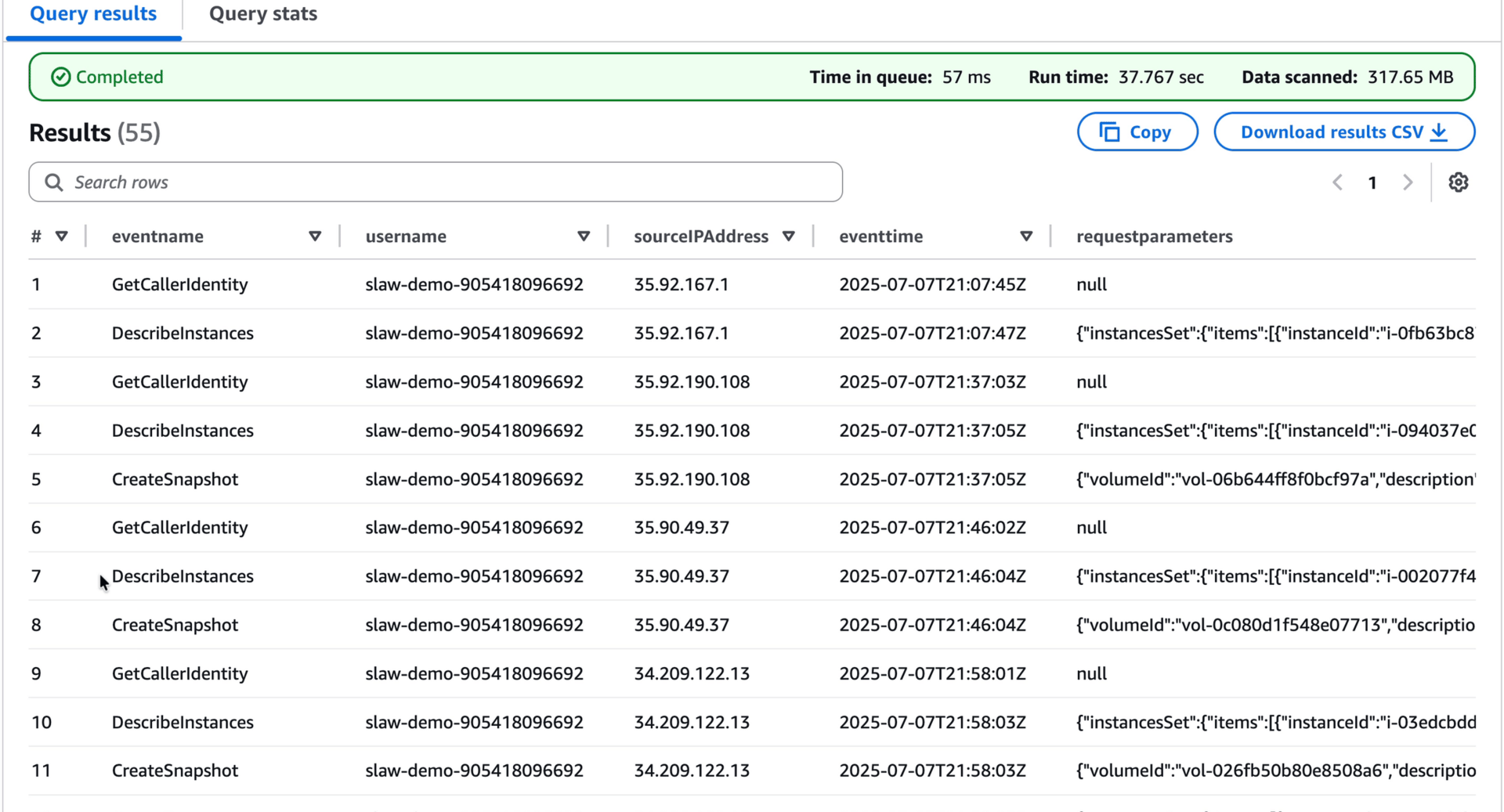

Well, it came from within AWS. And to save you time, AWS uses a lot of instances to run things (including lambda functions, which is what we did today) so that… is not overly helpful. However, this query will tell us all the API calls to our account from that IP, and might give us a better sense of what’s going on:

Open another query window and use this query:

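```sql
-- Every API call from one source IP.
-- Replace IPADDRESS with the IP from the earlier results.
SELECT
  awsregion,
  eventname,
  eventtime,
  useragent
FROM cloudtrail_logs.organization_trail
WHERE sourceIpAddress = 'IPADDRESS'
ORDER BY eventtime
```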

Well, that’s interesting: the useragent string shows this originated from a lambda function running python (that’s thanks to the Boto part of the string; Boto3 is the name of the python SDK for AWS).
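Reading a user agent by eye works, but splitting it into tokens makes the clues explicit. A sketch, assuming the space-separated name/value format that recent AWS SDKs for Python send. The sample string is illustrative rather than copied from the lab, and the exec-env token (which reports the Lambda runtime in newer SDK versions) may be missing from older ones.

```python
def parse_user_agent(ua):
    """Split a space-separated user agent into {name: value}, keeping the
    first value for names that repeat (such as 'md')."""
    fields = {}
    for token in ua.split():
        name, _, value = token.partition("/")
        fields.setdefault(name, value)
    return fields


def describe_client(ua):
    """Pull out the clues discussed above: Python SDK and Lambda runtime."""
    fields = parse_user_agent(ua)
    return {
        "python_sdk": "Boto3" in fields or "Botocore" in fields,
        # Assumption: Lambda shows up as an exec-env/AWS_Lambda_... token
        "lambda": fields.get("exec-env", "").startswith("AWS_Lambda"),
        "language": fields.get("lang", ""),
    }


# Illustrative user agent, not taken from real logs
sample_ua = ("Boto3/1.34.0 md/Botocore#1.34.0 ua/2.0 os/linux#5.10.0 "
             "md/arch#x86_64 lang/python#3.12.0 md/pyimpl#CPython "
             "exec-env/AWS_Lambda_python3.12 cfg/retry-mode#legacy Botocore/1.34.0")

print(describe_client(sample_ua))
```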

I also see GetCallerIdentity, which can be an indicator of attack, because it’s the API call you make when you… don’t know who you are. If you are an attacker and you get an access key and secret key, you often start with GetCallerIdentity to learn which account you just got access to. This is a noisy method; there are quieter ones out there.
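One way to turn that observation into a hunt is to flag access keys whose earliest logged call is GetCallerIdentity. This is a heuristic lead, not proof: plenty of legitimate scripts do the same thing, and it only works if your log window covers the key’s first use. A sketch over CloudTrail-style records, with hypothetical key IDs.

```python
def keys_starting_with_whoami(records):
    """Return access key IDs whose earliest logged call is GetCallerIdentity.
    A heuristic lead, not proof of compromise: scripts and tools do this too."""
    first_call = {}
    for record in sorted(records, key=lambda r: r["eventTime"]):
        key = (record.get("userIdentity") or {}).get("accessKeyId")
        if key and key not in first_call:
            first_call[key] = record.get("eventName")
    return {key for key, name in first_call.items() if name == "GetCallerIdentity"}


# Hypothetical records and key IDs
records = [
    {"eventTime": "2024-01-01T00:00:01Z", "eventName": "GetCallerIdentity",
     "userIdentity": {"accessKeyId": "AKIAEXAMPLEKEY000001"}},
    {"eventTime": "2024-01-01T00:00:02Z", "eventName": "DescribeInstances",
     "userIdentity": {"accessKeyId": "AKIAEXAMPLEKEY000001"}},
    {"eventTime": "2024-01-01T00:00:00Z", "eventName": "ListBuckets",
     "userIdentity": {"accessKeyId": "AKIAEXAMPLEKEY000002"}},
]

print(keys_starting_with_whoami(records))
```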

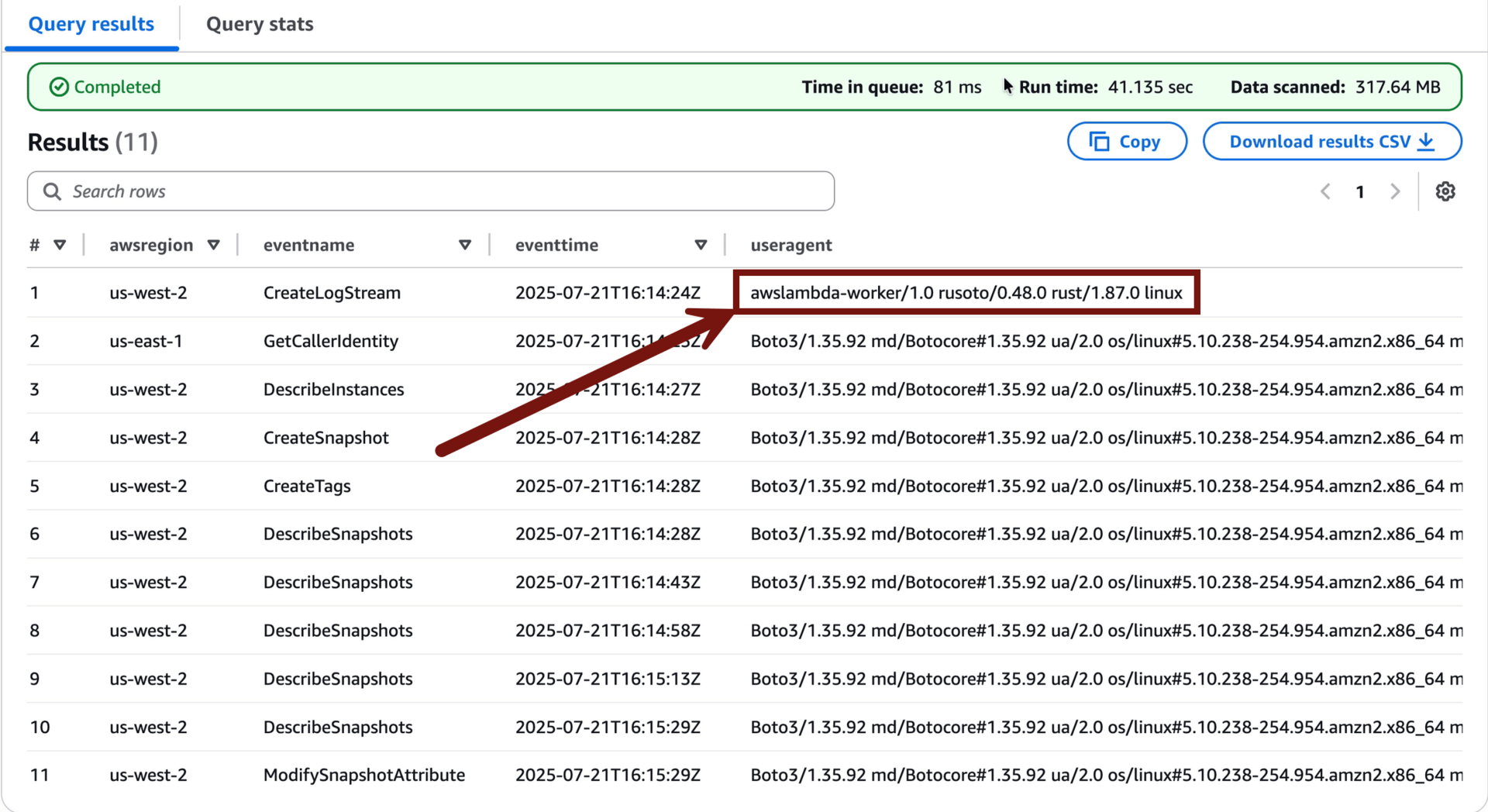

Caller

Let’s look at all the API calls from that IAM user. Open another query window and use this query:

```sql
-- All calls made by the lab's IAM user.
-- Replace YOURID with your account ID.
SELECT eventname, useridentity.username, sourceIPAddress, eventtime, requestparameters
FROM cloudtrail_logs.organization_trail
WHERE useridentity.username = 'slaw-demo-YOURID'
ORDER BY eventtime ASC;
```

I have more results than you, since I ran this multiple times, and we are searching on a name (if I looked for the access key it would only show unique results, since those aren’t reused… mostly). I also added the RequestParameters to the results, just to show how you can add and remove the data you are pulling based on what you are doing at the time.
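Since searching on the user name mixes several runs together, splitting the results by access key pulls them apart. A sketch over CloudTrail-style records, assuming the JSON field names userIdentity.userName and userIdentity.accessKeyId, and that each run used its own key (the "mostly" above still applies). The user name and key IDs are hypothetical.

```python
from collections import defaultdict


def runs_by_access_key(records, username):
    """Group one IAM user's calls by access key ID, each list in time order,
    so separate runs under the same user name don't blend together."""
    runs = defaultdict(list)
    for record in sorted(records, key=lambda r: r["eventTime"]):
        identity = record.get("userIdentity") or {}
        if identity.get("userName") == username:
            runs[identity.get("accessKeyId", "unknown")].append(record["eventName"])
    return dict(runs)


USER = "slaw-demo-111122223333"  # hypothetical
records = [
    {"eventTime": "2024-01-02T00:00:00Z", "eventName": "GetCallerIdentity",
     "userIdentity": {"userName": USER, "accessKeyId": "AKIAEXAMPLEKEY000002"}},
    {"eventTime": "2024-01-01T00:00:00Z", "eventName": "GetCallerIdentity",
     "userIdentity": {"userName": USER, "accessKeyId": "AKIAEXAMPLEKEY000001"}},
    {"eventTime": "2024-01-01T00:00:05Z", "eventName": "DescribeInstances",
     "userIdentity": {"userName": USER, "accessKeyId": "AKIAEXAMPLEKEY000001"}},
]

for key, calls in runs_by_access_key(records, USER).items():
    print(key, calls)
```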

As I look at this, I don’t see anything I didn’t already know from pulling the other logs. The IAM user runs GetCallerIdentity, then describes instances, takes a snapshot of one, then makes it public.
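That sequence can also be checked mechanically: does a list of event names contain these steps in order, with anything else allowed in between? A subsequence check in Python, where the chain is the one described above and the observed list is hypothetical.

```python
def contains_in_order(event_names, pattern):
    """True if every step in `pattern` appears in `event_names` in the same
    order, allowing other calls in between (a subsequence match)."""
    remaining = iter(event_names)
    # `step in remaining` consumes the iterator up to and including a match,
    # so later steps can only match events that come after earlier ones
    return all(step in remaining for step in pattern)


ATTACK_CHAIN = ["GetCallerIdentity", "DescribeInstances",
                "CreateSnapshot", "ModifySnapshotAttribute"]

# Hypothetical, time-ordered event names
observed = ["GetCallerIdentity", "DescribeInstances", "CreateSnapshot",
            "CreateTags", "DescribeSnapshots", "DescribeSnapshots",
            "ModifySnapshotAttribute"]

print(contains_in_order(observed, ATTACK_CHAIN))                  # True
print(contains_in_order(list(reversed(observed)), ATTACK_CHAIN))  # False
```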

Track

This is where we look for indications of a pivot. There isn’t really a query for this, so we look for indicators like multiple IAM users or roles being used from the same weird IP address, signs of role chaining and similar. It’s more than we can really cover in this basic scenario, sorry!
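One of those indicators, several identities calling from the same address, is easy to sketch over exported CloudTrail records. Expect noise: for calls made by AWS services, sourceIPAddress can hold a service name instead of an IP, and shared NAT or VPN addresses legitimately carry many identities. The IPs below come from documentation ranges, and the role and user names are hypothetical.

```python
from collections import defaultdict


def ips_with_multiple_identities(records):
    """Map each source IP to the caller ARNs seen from it, keeping only IPs
    used by more than one identity (one possible pivot indicator)."""
    seen = defaultdict(set)
    for record in records:
        arn = (record.get("userIdentity") or {}).get("arn")
        ip = record.get("sourceIPAddress")
        if arn and ip:
            seen[ip].add(arn)
    return {ip: arns for ip, arns in seen.items() if len(arns) > 1}


# Hypothetical records
records = [
    {"sourceIPAddress": "203.0.113.10",
     "userIdentity": {"arn": "arn:aws:iam::111122223333:user/slaw-demo-111122223333"}},
    {"sourceIPAddress": "203.0.113.10",
     "userIdentity": {"arn": "arn:aws:sts::111122223333:assumed-role/SomeRole/session"}},
    {"sourceIPAddress": "198.51.100.7",
     "userIdentity": {"arn": "arn:aws:iam::111122223333:user/someone-else"}},
]

print(ips_with_multiple_identities(records))
```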

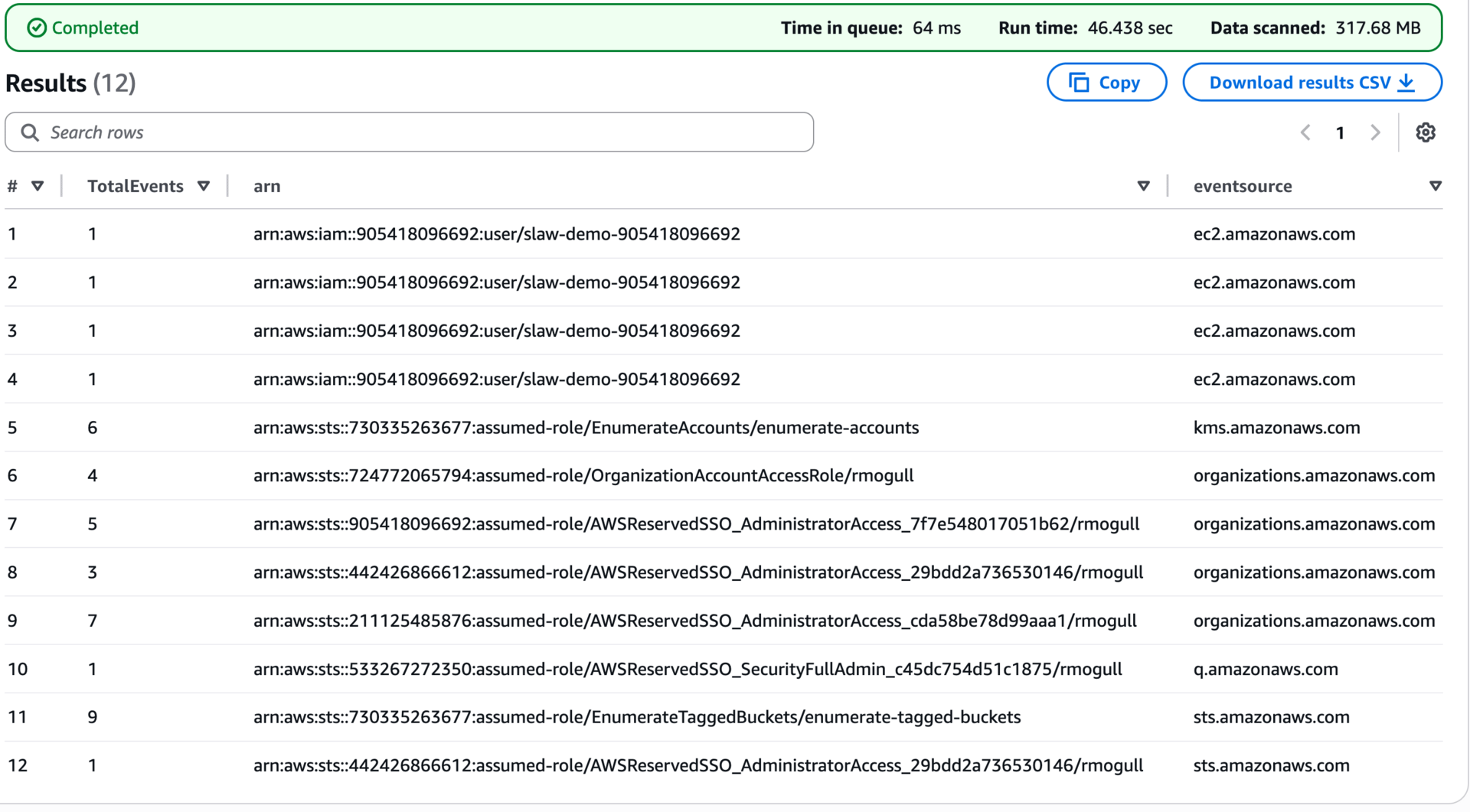

But hey, I don’t want to leave you with nothing! Here’s a query to identify any API calls which were denied or had errors. It can be super noisy and not always useful, but combined with the other information we gathered, it might help give you a sense of what security boundaries the attacker was trying to get past:

```sql
-- Denied or unauthorized calls, grouped by caller and API
SELECT count(*) AS TotalEvents, useridentity.arn, eventsource, eventname, errorCode, errorMessage
FROM cloudtrail_logs.organization_trail
WHERE (errorcode LIKE '%Denied%' OR errorcode LIKE '%Unauthorized%')
GROUP BY eventsource, eventname, errorCode, errorMessage, useridentity.arn
ORDER BY eventsource, eventname
```
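If you are working from exported records instead of Athena, the same grouping is a Counter keyed on the GROUP BY columns. A sketch using the same "Denied"/"Unauthorized" substrings as the LIKE filters (a case-sensitive substring match); the records and error messages are hypothetical.

```python
from collections import Counter

DENIAL_MARKERS = ("Denied", "Unauthorized")  # same substrings as the LIKE filters


def denied_call_counts(records):
    """Count denied/unauthorized calls per (arn, eventSource, eventName,
    errorCode, errorMessage), mirroring the GROUP BY query."""
    counts = Counter()
    for record in records:
        code = record.get("errorCode") or ""
        if any(marker in code for marker in DENIAL_MARKERS):
            arn = (record.get("userIdentity") or {}).get("arn", "unknown")
            key = (arn, record.get("eventSource"), record.get("eventName"),
                   code, record.get("errorMessage"))
            counts[key] += 1
    return counts


ARN = "arn:aws:iam::111122223333:user/slaw-demo-111122223333"  # hypothetical
EC2_DENIED = {"userIdentity": {"arn": ARN}, "eventSource": "ec2.amazonaws.com",
              "eventName": "DeleteSnapshot", "errorCode": "Client.UnauthorizedOperation",
              "errorMessage": "You are not authorized to perform this operation."}
records = [
    EC2_DENIED,
    EC2_DENIED,
    {"userIdentity": {"arn": ARN}, "eventSource": "s3.amazonaws.com",
     "eventName": "ListBuckets", "errorCode": "AccessDenied", "errorMessage": "Access Denied"},
    {"userIdentity": {"arn": ARN}, "eventSource": "ec2.amazonaws.com",
     "eventName": "DescribeInstances"},  # succeeded, so not counted
]

for key, total in denied_call_counts(records).most_common():
    print(total, key)
```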

Nothing really stood out to me when I went through these. Normally I would time-bound around the attack, but I was lazy and pulled them all from the last 3 months.
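Time-bounding is the easy fix for that. In Athena it means adding eventtime conditions to the WHERE clause; over exported records, a sketch looks like the one below. It centers a window on a chosen event (for example, the ModifySnapshotAttribute call), assumes CloudTrail’s eventTime format such as 2024-01-01T00:05:00Z, and uses a hypothetical window size and records.

```python
from datetime import datetime, timedelta

CLOUDTRAIL_TIME = "%Y-%m-%dT%H:%M:%SZ"  # assumed eventTime format, e.g. 2024-01-01T00:05:00Z


def within_window(records, center, hours=24):
    """Keep records whose eventTime is within +/- `hours` of `center`."""
    mid = datetime.strptime(center, CLOUDTRAIL_TIME)
    start, end = mid - timedelta(hours=hours), mid + timedelta(hours=hours)
    return [r for r in records
            if start <= datetime.strptime(r["eventTime"], CLOUDTRAIL_TIME) <= end]


# Hypothetical records
records = [
    {"eventName": "ListBuckets", "eventTime": "2023-12-01T09:00:00Z"},
    {"eventName": "DeleteSnapshot", "eventTime": "2024-01-01T02:00:00Z"},
]

kept = within_window(records, "2024-01-01T00:05:00Z", hours=6)
print([r["eventName"] for r in kept])  # ['DeleteSnapshot']
```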

Forensics

This is… everything else. Do I need to see everything running on the instance or what data was stored on it? Do I have other kinds of logs like database or VPC flow logs to review?

Incident Summary

Our objective today was to gain more experience with Athena security queries through a very basic incident. Based on our queries and looking around, we now know:

- A lambda function from another account:
  - Used GetCallerIdentity to figure out what account it belonged to.
  - Described our instances.
  - Took a snapshot of an instance (technically, of the storage volume).
  - Tagged the snapshot deleteme.
  - Used ModifySnapshotAttribute to make the snapshot public.
- The IAM user had permissions which allowed it to snapshot any instance and make it public.
- The lambda function used the python SDK.
- The instance did not have any permissions (roles) assigned to it.

I’d guess this was an exposed-credentials situation, largely due to the GetCallerIdentity API call. Also, I coded the attack and sent your credentials to my account in the first place.

I hope this was useful. We’ll come back to this exact scenario later, and go into much greater depth to fill in the gaps I glossed over. This set of queries is nearly always where I start, and if you are using a commercial SIEM you can easily modify them for the appropriate query language for your platform.

-Rich
