- Cloud Security Lab a Week (S.L.A.W)


Role Chaining and Testing Your Code

We set up our role chain in the last lab; now it's time to implement it in code and learn how to test Lambda functions.

CloudSLAW is, and always will be, free. But to help cover costs and keep the content up to date we have an optional Patreon. For $10 per month you get access to our support Discord, office hours, exclusive subscriber content (not labs — those are free — just extras) and more. Check it out!

Prerequisites

This is part 7 of the Advanced Cloud Security Problem Solving series, which I’ve been abbreviating to Epic Automation. If you haven’t completed parts 1-6 yet, jump back to the first post in the series and complete all prior posts before trying this one.

The Lesson

This lesson is all hands-on. We’ve covered the principles, so now it’s time to start implementing. We will focus on two mechanics:

How to implement a role chain in code

How to test a Lambda function in the console and work through error messages.

Let’s quickly cover each of these, and then get into the meat of this lab.

Role Chaining in Code

As a reminder, a role chain is when you use one role to assume another role. In our last lab we learned how to define permissions to secure a role chain, so everything is now in place to assume a role. This isn’t the first time you’ve role chained: in previous labs, when we logged into IAM Identity Center and then used the little Switch Role link in the upper-right corner of the console, that was technically chaining from our Identity Center role into OrganizationAccountAccessRole in the target account. But stuff in the console is a bit magical and abstracted. Now we’ll see the guts.

The trick in code is that you are creating a session. You can create multiple sessions in code, and then pick which one to use when making an API call. Heck, you could set up 8 different sessions, go all Doc Ock, and do different things in different accounts simultaneously.

The TL;DR on how API calls in AWS actually work

I use the term “session” a lot, and sometimes in the console and code it looks like there is some kind of secure pipe we make all our API calls through… but that is not how it works!!!

When AWS was created, SSL hardware that could handle the scale of even then-baby AWS was very expensive and not really practical. So, to keep AWS API calls secure, Amazon built the security into each request itself. When you make an API call into AWS, the request is signed using a process called Signature Version 4 (SigV4).

Each API call is a self-contained, signed request: your SDK uses your secret access key to compute a signature over the request, and AWS verifies that signature independently for every call. The secret key itself is never sent. (Today the calls also travel over HTTPS, but the authentication lives in each individual request.) Think more “sealed, signed letters going through the mail”, and less “encrypted phone calls”.

We always need a base identity. In our case, that’s the Lambda execution role. When we assign a role to a Lambda function, the Lambda service assumes it for us when the function runs. Any API call that doesn’t specify another session uses the execution role.

After that, we need to manually assume roles in our code. Assuming a role retrieves temporary credentials used to make API calls.

Here’s the function in our code to assume a role in another account. Since we use it to assume roles in multiple accounts, but the role name is always the same, this little function only needs the Account ID of the account to jump into; it already knows the role name. Boto3 is the name of the Python library for AWS; don’t let that confuse you!

It’s doing the following:

Take the account ID to jump into as its input.

Build the full “role_arn”: the ARN of the role we will assume, including the account ID and path/name.

Create a client (boto3.client('sts')) for the Security Token Service (STS), which we use to assume roles.

Assume the target role (role_arn) and set the session name to S3SecurityAnalysis. You can use whatever session name you want; it shows up in the CloudTrail logs.

sts_client.assume_role returns a bunch of stuff; we pull out the temporary credentials (Access Key ID, Secret Access Key, and Session Token).

The function returns those credentials.
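To make those steps concrete without needing an AWS account, here’s a minimal sketch that restructures assume_role so the STS client is passed in as a parameter. That’s a hypothetical refactor (the lab’s code creates the client itself with boto3.client('sts')), but it lets you check the mapping from the AssumeRole response to boto3.Session keyword arguments locally. FakeSTS and the account ID are made up for illustration.

```python
from datetime import datetime, timezone

ROLE_PATH_AND_NAME = "SecurityOperations/SecurityAutoremediation"


def assume_role(account_id, sts_client):
    """Assume the SecurityAutoremediation role in the target account.

    sts_client is injected (hypothetical refactor) so this can be tested
    without AWS; inside Lambda you would pass boto3.client('sts').
    """
    role_arn = f"arn:aws:iam::{account_id}:role/{ROLE_PATH_AND_NAME}"
    response = sts_client.assume_role(
        RoleArn=role_arn,
        RoleSessionName="S3SecurityAnalysis",
    )
    creds = response["Credentials"]
    # Rename the keys to match the keyword arguments boto3.Session() accepts
    return {
        "aws_access_key_id": creds["AccessKeyId"],
        "aws_secret_access_key": creds["SecretAccessKey"],
        "aws_session_token": creds["SessionToken"],
    }


class FakeSTS:
    """Hypothetical stand-in that mimics the shape of an AssumeRole response."""

    def __init__(self):
        self.calls = []

    def assume_role(self, RoleArn, RoleSessionName):
        self.calls.append((RoleArn, RoleSessionName))
        return {
            "Credentials": {
                "AccessKeyId": "ASIAEXAMPLE",
                "SecretAccessKey": "example-secret",
                "SessionToken": "example-token",
                "Expiration": datetime(2030, 1, 1, tzinfo=timezone.utc),
            }
        }


fake = FakeSTS()
creds = assume_role("111122223333", fake)
print(fake.calls[0][0])
# prints arn:aws:iam::111122223333:role/SecurityOperations/SecurityAutoremediation
```

In the real function the equivalent would be boto3.Session(**assume_role(account_id, boto3.client('sts'))). Passing the client in is a common trick for testing code that talks to AWS, because you can swap in a fake without changing the logic.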

That’s the key point: assuming a role retrieves temporary credentials, which we use to make API calls; we still have credentials, they are just ephemeral.

Here’s where it gets a bit tricky. This process gives us temporary credentials, but we need to create the session. What’s that? Basically it’s a container (object) in the code which holds the credentials, region, etc. for the actual API calls. This function doesn’t reconnect with AWS — it just says, “Create a session object that stores the credentials which I can use for API calls.” Remember that a “session” on the AWS side is just “a set of credentials which expire”, not some persistent pipe.

The “session” object in boto3 is just the container, so you don’t need to specify the keys and configuration every time you make an actual API call.

```python
# Assume role in the target account
try:
    credentials = assume_role(account_id)
    session = boto3.Session(**credentials)
except Exception as e:
    logger.error(f"Failed to create cross-account session: {str(e)}")
    return {
        'statusCode': 500,
        'body': json.dumps(f'Failed to create cross-account session: {str(e)}')
    }
```

Now we have our credentials in a little object we can use for API calls with lines like s3_client = session.client('s3'), which sets us up to talk to the S3 service. The boto3 Software Development Kit (SDK) converts a command like s3_client.get_bucket_policy(Bucket=bucket_name) into a signed request for the bucket policy, packages it up, and sends it to the S3 service.

Phew. This is one of those situations where I don’t want to overcomplicate or oversimplify. It’s a very elegant system, especially once you go deep into the weeds to see how AWS processes all these requests. There is crazy stuff like service, region, and even daily keys under the covers. Let’s just say these requests aren’t simply unpacked and passed around on the back end of AWS… everything is really locked down at every level.
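Those service, region, and daily keys come from how SigV4 derives a signing key. Here’s a minimal sketch of that derivation using only the Python standard library, following the chain AWS documents (date, then region, then service, then the literal "aws4_request"). It only covers the key derivation and the final HMAC; building the canonical request and the string to sign is left out, and the secret and inputs are placeholders.

```python
import hashlib
import hmac


def _hmac_sha256(key: bytes, msg: str) -> bytes:
    """HMAC-SHA256 of a UTF-8 string, returning raw bytes."""
    return hmac.new(key, msg.encode("utf-8"), hashlib.sha256).digest()


def derive_signing_key(secret_key: str, date_stamp: str, region: str, service: str) -> bytes:
    """Derive a SigV4 signing key scoped to one day, one region, and one service."""
    k_date = _hmac_sha256(("AWS4" + secret_key).encode("utf-8"), date_stamp)
    k_region = _hmac_sha256(k_date, region)
    k_service = _hmac_sha256(k_region, service)
    return _hmac_sha256(k_service, "aws4_request")


def sign(signing_key: bytes, string_to_sign: str) -> str:
    """Final signature: hex-encoded HMAC-SHA256 of the string to sign."""
    return hmac.new(signing_key, string_to_sign.encode("utf-8"), hashlib.sha256).hexdigest()


secret = "EXAMPLE-SECRET-ACCESS-KEY"  # placeholder, not a real key
key_s3 = derive_signing_key(secret, "20250101", "us-west-2", "s3")
key_sts = derive_signing_key(secret, "20250101", "us-west-2", "sts")
print(sign(key_s3, "placeholder string to sign"))
```

Because each key is scoped this way, a key derived for S3 in us-west-2 today can’t be reused for STS, for another region, or tomorrow, and the secret access key itself never leaves your machine.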

Okay, one last attempt to keep it simple:

You start with a base identity — otherwise you can’t even talk to the Security Token Service (STS) to try assuming a role.

You make the AssumeRole API call, which returns a set of temporary credentials you can use for a session (until they expire).

Calling boto3.Session creates a reusable object in code which holds those credentials and makes them easier to use. (Among other things, every client you create from that session picks up the credentials automatically, instead of you passing keys to each one.)

session.client(service) sets us up to make API calls to a service. Each API call gets wrapped up as a signed letter, using the keys retrieved with AssumeRole.
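The “(until they expire)” part matters in longer-running code. AssumeRole returns an Expiration timestamp along with the credentials (by default they last one hour). Here’s a minimal sketch of a check you could run before reusing a session; the helper name and the five-minute safety margin are assumptions for illustration.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def credentials_need_refresh(expiration: datetime,
                             now: Optional[datetime] = None,
                             margin: timedelta = timedelta(minutes=5)) -> bool:
    """Return True if temporary credentials expire within `margin` of `now`.

    `expiration` should be the timezone-aware datetime found in
    response['Credentials']['Expiration'] (boto3 parses it for you).
    """
    if now is None:
        now = datetime.now(timezone.utc)
    return now >= expiration - margin


expires = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
print(credentials_need_refresh(expires, now=datetime(2025, 1, 1, 11, 30, tzinfo=timezone.utc)))  # False
print(credentials_need_refresh(expires, now=datetime(2025, 1, 1, 11, 56, tzinfo=timezone.utc)))  # True
```

If it returns True, call assume_role again and build a fresh boto3.Session (and fresh clients) from the new credentials.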

In our code we only have one session, so we call it “session”. I’ve written code where I need to juggle multiple sessions to multiple accounts, and I just name the session so I can track which one I’m using. E.g. “session_logs”, “session_database”, “session_breakthings”.
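If you do end up juggling several accounts, one option (a sketch, not the lab’s code) is to keep sessions in a dictionary keyed by a name you choose. The assume and session_factory parameters are hypothetical hooks: in a real Lambda you’d pass the lab’s assume_role and boto3.Session; here they’re stubbed so the example runs without AWS.

```python
from typing import Callable, Dict


def build_sessions(accounts: Dict[str, str],
                   assume: Callable[[str], dict],
                   session_factory: Callable[..., object]) -> Dict[str, object]:
    """Create one session per named account, e.g. {'logs': '111122223333'}."""
    return {name: session_factory(**assume(account_id))
            for name, account_id in accounts.items()}


# Stub so this runs locally; swap in assume_role for real use.
def fake_assume(account_id):
    return {"aws_access_key_id": f"KEY-{account_id}",
            "aws_secret_access_key": "secret",
            "aws_session_token": "token"}


sessions = build_sessions(
    {"logs": "111122223333", "database": "444455556666"},
    assume=fake_assume,
    session_factory=dict,  # stand-in for boto3.Session; just stores the keyword arguments
)
print(sessions["logs"]["aws_access_key_id"])  # KEY-111122223333
```

With real sessions you’d then write something like sessions["logs"].client("s3"), which keeps it obvious which account each call goes to.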

Testing Lambda Code

Wow, this is already longer than I planned.

Okay… to test a lambda there are the “I’m a real developer” options, and the “I’m a hacker/security person who just slaps stuff together” options.

We’ll go with the latter. Duh.

If you’re doing full-time development on a big project, you probably want a local testing framework like LocalStack or the AWS SAM CLI. For our purposes we’ll use the console’s built-in testing capability.

Last lab we made changes which triggered our function, and then we looked in the logs to see what broke. That is inefficient, and testing in the console with test events is a much better option. So, yeah, we’ll do that.

Key Lesson Points

Assuming a role asks the Security Token Service (STS) for a set of temporary credentials that work for a limited time; we call that a session.

In code, you need to supply those credentials when you make API calls.

To make this easier, the SDKs for different programming languages allow you to create an object called a “session” which holds those credentials, so you don’t need to specify them every time.

The Python library is called Boto3. No, I don’t know why. Yes, I could ask one of my AIs to figure it out. No, I’m not going to.

You can test Lambda functions locally using dedicated tools, but you can also load a test event into the console and run the test there.

The Lab

We will:

Generate an event in our Production1 account, which we can use as a test event for our lambda function.

Create the test event in the console.

Update our function with the new code Rich wrote, which includes the role chain and some API calls to gather information about the S3 bucket. Rich promises he wrote it all himself and didn’t use AI. (Rich lies).

Run the test, and then fix 2 problems with the code.

Video Walkthrough

Step-by-Step

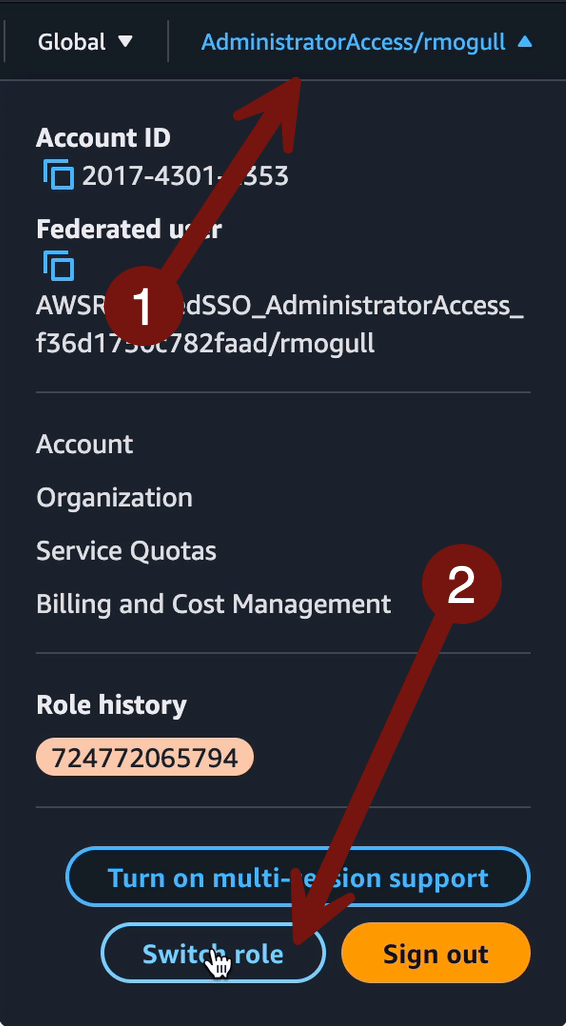

Start in your Sign-in portal > CloudSLAW > AdministratorAccess. We need to go back to our Production1 account and change a tag on our bucket to generate a sample event to work with. (If you still have one in your email, you can use that and skip this part.) If the account is still in your Role history, just click it. If not, here are the screenshots to switch roles (which is just an AssumeRole from your AdministratorAccess role into the OrganizationAccountAccessRole in that account… yep, a simple role chain!).

That was just a reminder in case you didn’t have the account in your Role history.

Okay, let’s go into S3 and change a tag on our bucket. Since we’ve done this already I’ll just shortcut it, showing the main screenshots in order. I mean, you’ve been with me over a year at this point, so I have a lot of confidence in you. 😀

After you Save changes, close the tab. Then check your email for the event. Remember we left the EventBridge rule in place to email you events? It’s almost like I planned some things for once!!! Copy that event and paste it into a text editor (we won’t edit it, but we need a copy we can grab easily). Also, copy only the JSON text! No extra spaces or anything:

Now let’s go into our Lambda function and update the code. Sign-in portal > SecurityOperations > AdministratorAccess > Lambda > security-auto-s3

Click Test, then name it S3Test, and paste in your test event. To make sure it’s a proper event click Format JSON — it’s easy to make mistakes like missing a character, and this helps catch those. Then Save.
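Format JSON in the console catches syntax errors, but if you keep saved events in a text editor it can help to sanity-check them locally too. Here’s a minimal sketch, assuming the event is the EventBridge event from your email; the required keys are the two top-level fields the lab’s function reads (account and detail), and the sample strings are made up and heavily trimmed.

```python
import json


def check_test_event(raw: str) -> list:
    """Return a list of problems with a pasted test event (empty list means it looks OK)."""
    try:
        event = json.loads(raw)
    except json.JSONDecodeError as e:
        return [f"Invalid JSON at line {e.lineno}, column {e.colno}: {e.msg}"]
    if not isinstance(event, dict):
        return ["Test event must be a JSON object"]
    # The lab's function reads these two top-level keys
    return [f"Missing top-level key: {key}"
            for key in ("account", "detail") if key not in event]


good = '{"account": "111122223333", "detail": {"eventName": "PutBucketTagging"}}'
bad = '{"account": "111122223333", "detail": {"eventName": "PutBucketTagging"}'  # missing closing brace
print(check_test_event(good))  # []
print(check_test_event(bad))
```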

We need to swap in our new code. Although I focused on role chaining in the Lesson section, here’s what it all does:

It pulls the account ID from the event, so it knows which account generated the event.

It pulls the bucket ARN from the event, so it knows which bucket changed.

It assumes the SecurityAutoremediation role in the identified target account.

It gathers some information about the S3 bucket, which we will use later to analyze the security posture.
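Here’s a stripped-down sketch of the first two steps, run against a hand-made event. The event is a hypothetical, heavily trimmed example of the shape the lab’s code expects (account at the top level, detail.requestParameters.bucketName from CloudTrail); your real event has many more fields. This sketch only checks requestParameters, while the full code below also looks in resources and responseElements.

```python
def extract_target(event: dict):
    """Return (account_id, bucket_name) from an EventBridge CloudTrail event; missing parts come back as None."""
    account_id = event.get("account")
    params = event.get("detail", {}).get("requestParameters") or {}
    return account_id, params.get("bucketName")


sample_event = {
    "account": "111122223333",
    "detail-type": "AWS API Call via CloudTrail",
    "source": "aws.s3",
    "detail": {
        "eventName": "PutBucketTagging",
        "requestParameters": {"bucketName": "example-bucket"},
    },
}
print(extract_target(sample_event))  # ('111122223333', 'example-bucket')
```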

Click Code. Here’s our new code. Copy this and paste it over the existing code > Deploy:

```python
import json
import boto3
import re
import logging
from botocore.exceptions import ClientError

# Set up logging
logger = logging.getLogger()
logger.setLevel(logging.INFO)


def assume_role(account_id):
    """
    Assume the SecurityAutoremediation role in the target account
    """
    role_arn = f"arn:aws:iam::{account_id}:role/SecurityOperations/SecurityAutoremediation"
    logger.info(f"Assuming role: {role_arn}")
    sts_client = boto3.client('sts')
    try:
        response = sts_client.assume_role(
            RoleArn=role_arn,
            RoleSessionName="S3SecurityAnalysis"
        )
        credentials = response['Credentials']
        return {
            'aws_access_key_id': credentials['AccessKeyId'],
            'aws_secret_access_key': credentials['SecretAccessKey'],
            'aws_session_token': credentials['SessionToken']
        }
    except Exception as e:
        logger.error(f"Failed to assume role {role_arn}: {str(e)}")
        raise


def extract_bucket_name(event):
    """
    Extract S3 bucket name from event by checking multiple possible locations
    """
    # Check in resources array
    if 'resources' in event['detail'] and event['detail']['resources']:
        for resource in event['detail']['resources']:
            if resource.get('type') == 'AWS::S3::Bucket' and resource.get('ARN'):
                arn = resource.get('ARN')
                bucket_match = re.search(r'arn:aws:s3:::([^/]+)', arn)
                if bucket_match:
                    return bucket_match.group(1)

    # Check in requestParameters
    if 'requestParameters' in event['detail']:
        req_params = event['detail']['requestParameters']
        if req_params and 'bucketName' in req_params:
            return req_params['bucketName']

    # Check in responseElements
    if 'responseElements' in event['detail'] and event['detail']['responseElements']:
        resp_elements = event['detail']['responseElements']
        # Various response element formats
        if 'x-amz-bucket-region' in resp_elements:
            bucket_match = re.search(r'arn:aws:s3:::([^/]+)',
                                     str(resp_elements))
            if bucket_match:
                return bucket_match.group(1)

    return None


def get_bucket_info(bucket_name, session):
    """
    Get comprehensive information about an S3 bucket using the provided session
    """
    s3_client = session.client('s3')
    sts_client = session.client('sts')

    # Get the account ID from the assumed role session
    account_id = sts_client.get_caller_identity().get('Account')

    result = {
        'bucket_name': bucket_name,
        'arn': f'arn:aws:s3:::{bucket_name}',
        'account_id': account_id,
        'tags': None,
        'has_bucket_policy': False,
        'bucket_policy': None,
        'acls_enabled': True,  # Default to True; set to False if ObjectOwnership is BucketOwnerEnforced
        'acls': None,
        'block_public_access_bucket': None
    }

    # Get bucket tags
    try:
        tags_response = s3_client.get_bucket_tagging(Bucket=bucket_name)
        result['tags'] = tags_response.get('TagSet', [])
    except ClientError as e:
        if e.response['Error']['Code'] == 'NoSuchTagSet':
            result['tags'] = []
        else:
            logger.warning(f"Error getting bucket tags: {str(e)}")

    # Get bucket policy
    try:
        policy_response = s3_client.get_bucket_policy(Bucket=bucket_name)
        result['has_bucket_policy'] = True
        result['bucket_policy'] = json.loads(policy_response.get('Policy', '{}'))
    except ClientError as e:
        if e.response['Error']['Code'] == 'NoSuchBucketPolicy':
            result['has_bucket_policy'] = False
        else:
            logger.warning(f"Error getting bucket policy: {str(e)}")

    # Get bucket ACLs and determine if ACLs are enabled
    try:
        acl_response = s3_client.get_bucket_acl(Bucket=bucket_name)
        result['acls'] = acl_response.get('Grants', [])

        # Check bucket ownership controls to determine if ACLs are enabled
        try:
            ownership = s3_client.get_bucket_ownership_controls(Bucket=bucket_name)
            rules = ownership.get('OwnershipControls', {}).get('Rules', [])
            for rule in rules:
                if rule.get('ObjectOwnership') == 'BucketOwnerEnforced':
                    result['acls_enabled'] = False
                    break
        except ClientError as e:
            if e.response['Error']['Code'] != 'OwnershipControlsNotFoundError':
                logger.warning(f"Error getting bucket ownership: {str(e)}")
    except ClientError as e:
        logger.warning(f"Error getting bucket ACL: {str(e)}")

    # Get block public access settings for bucket
    try:
        bpa_response = s3_client.get_public_access_block(Bucket=bucket_name)
        result['block_public_access_bucket'] = bpa_response.get('PublicAccessBlockConfiguration', {})
    except ClientError as e:
        if e.response['Error']['Code'] != 'NoSuchPublicAccessBlockConfiguration':
            logger.warning(f"Error getting bucket public access block: {str(e)}")

    return result


def lambda_handler(event, context):
    """
    Main Lambda handler function
    """
    try:
        logger.info(f"Processing event: {json.dumps(event)}")

        # Extract account ID from the event
        if 'account' in event:
            account_id = event['account']
        else:
            logger.error("Could not extract account ID from event")
            return {
                'statusCode': 400,
                'body': json.dumps('Could not extract account ID from event')
            }

        logger.info(f"Extracted account ID: {account_id}")

        # Assume role in the target account
        try:
            credentials = assume_role(account_id)
            session = boto3.Session(**credentials)
        except Exception as e:
            logger.error(f"Failed to create cross-account session: {str(e)}")
            return {
                'statusCode': 500,
                'body': json.dumps(f'Failed to create cross-account session: {str(e)}')
            }

        # For EventBridge events, format is slightly different
        if 'detail' in event:
            # Extract bucket name from CloudTrail event
            bucket_name = extract_bucket_name(event)
        else:
            # Direct S3 event format
            bucket_name = extract_bucket_name({'detail': event})

        if not bucket_name:
            logger.error("Could not extract bucket name from event")
            return {
                'statusCode': 400,
                'body': json.dumps('Could not extract bucket name from event')
            }

        logger.info(f"Extracted bucket name: {bucket_name}")

        # Get information about the bucket using the cross-account session
        bucket_info = get_bucket_info(bucket_name, session)
        logger.info(f"Results: {bucket_info}")

        return {
            'statusCode': 200,
            'body': json.dumps(bucket_info, default=str)
        }

    except Exception as e:
        logger.error(f"Error processing event: {str(e)}")
        return {
            'statusCode': 500,
            'body': json.dumps(f'Error: {str(e)}')
        }
```

If you are a Pro subscriber, I’ll be holding a code review session after we finish these labs, and if you are reading this a year later, check out the recorded video in the library.

Alrighty, click Test.

And it should fail. 😦

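Okay, it was a timeout. Easy enough to fix! The default Lambda timeout is only 3 seconds, and our function now makes several API calls across accounts. Jump off the Code tab to Configuration > General configuration > Edit > set the timeout to 30 seconds: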
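If you’d rather script this than click through the console, boto3’s Lambda client has update_function_configuration, which accepts a Timeout in seconds. A minimal sketch, assuming it runs with credentials in the SecurityOperations account; the client is passed in so the example can be exercised with a stub, and set_lambda_timeout and StubLambda are hypothetical names.

```python
def set_lambda_timeout(lambda_client, function_name: str, seconds: int) -> dict:
    """Set a Lambda function's timeout (in seconds) via UpdateFunctionConfiguration."""
    if not 1 <= seconds <= 900:
        raise ValueError("Lambda timeouts must be between 1 and 900 seconds")
    return lambda_client.update_function_configuration(
        FunctionName=function_name,
        Timeout=seconds,
    )


# Real use (needs AWS credentials):
#   import boto3
#   set_lambda_timeout(boto3.client("lambda"), "security-auto-s3", 30)

class StubLambda:
    """Hypothetical stub that records the call instead of hitting AWS."""

    def update_function_configuration(self, **kwargs):
        self.last_call = kwargs
        return {"FunctionName": kwargs["FunctionName"], "Timeout": kwargs["Timeout"]}


stub = StubLambda()
print(set_lambda_timeout(stub, "security-auto-s3", 30))
```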

Here’s where things get a bit tricky. As a heads up, there is a lot of error handling built into the code, so errors are managed gracefully and the function runs to completion, but some errors only show up in the logs, since they were handled. I deliberately left in a common error, one you will hit a lot over the years. Click Test again, then scroll through the Output:

Here’s what happened. As far as the console is concerned, our function worked, because the internal error handling caught and handled the error. The problem is… we still have an error. In this case we are missing the permission (in the target account) to check the bucket’s ownership controls (GetBucketOwnershipControls), which tell us whether ACLs are enabled (we talked about that a long time ago).

To fix this, we need to change permissions in the account with that S3 bucket. How? Come on, you got this… StackSets! We just need to update our StackSet to add that permission! In a real enterprise this would be handled using a CI/CD pipeline, but it isn’t hard for us to make the change in the console. And one big difference: since people run through these labs at their own pace, and we need this error for this lab, we will use a new template instead of fixing the old one.
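For reference, the IAM action behind that API call is s3:GetBucketOwnershipControls. Here’s a hypothetical sketch of a statement granting it, written as a Python dict that serializes to IAM policy JSON; the Sid and the resource scope are assumptions, and the lab’s lab55.template may be written differently.

```python
import json

# Hypothetical statement; the lab's actual template may scope this differently.
ownership_statement = {
    "Sid": "ReadBucketOwnershipControls",
    "Effect": "Allow",
    "Action": "s3:GetBucketOwnershipControls",
    "Resource": "arn:aws:s3:::*",
}

print(json.dumps(ownership_statement, indent=2))
```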

Personally, this is where I like to open another tab, so I can keep the function open to run the test again. Right-click the AWS icon in the upper-left corner, choose Open link in new tab, then go to CloudFormation > StackSets > Service-managed > AutoremediationRole:

The first thing we need is the OU ID where the stack is deployed. Copy the OU ID and paste it into a text editor (since we also need to copy the URL to the updated template in a second):

Then Actions > Edit StackSet details:

Select Replace current template, then under Specify template paste in this URL: https://cloudslaw.s3-us-west-2.amazonaws.com/lab55.template, then click Next:

Click Next on the next 2 screens, then paste in the OU ID and select All regions (or just US East (N. Virginia)). Then Next > Submit:

It took me about 3 minutes for the template to update. As a reminder, this new template is exactly the same as the previous one, with just the new permission added.

Go back to your lambda function > Test > PROFIT!

No more error messages, and the results have all the information we need to make a security decision!
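If you’d like handled errors to show up in the console’s Test output (not just in the logs), one option is to record them in the result the function returns. Here’s a minimal sketch of that pattern; the safe_call and error_code helpers and the errors list are hypothetical additions, not part of the lab’s code. error_code reads the AWS error code the way botocore’s ClientError exposes it (e.response['Error']['Code']), and FakeClientError is a stand-in with that shape so the example runs without botocore.

```python
def error_code(exc: Exception) -> str:
    """Return the AWS error code from a botocore-style ClientError, else the exception type name."""
    response = getattr(exc, "response", None)
    if isinstance(response, dict):
        return response.get("Error", {}).get("Code", type(exc).__name__)
    return type(exc).__name__


def safe_call(result: dict, label: str, fn, *args, ignore_codes=(), **kwargs):
    """Run fn; on failure, append the error to result['errors'] unless its code is expected."""
    try:
        return fn(*args, **kwargs)
    except Exception as e:  # broad on purpose: we want every unexpected failure recorded
        code = error_code(e)
        if code not in ignore_codes:
            result.setdefault("errors", []).append(f"{label}: {code}")
        return None


class FakeClientError(Exception):
    """Hypothetical stand-in shaped like botocore's ClientError."""

    def __init__(self, code):
        super().__init__(code)
        self.response = {"Error": {"Code": code}}


def denied(**kwargs):
    raise FakeClientError("AccessDenied")


def no_policy(**kwargs):
    raise FakeClientError("NoSuchBucketPolicy")


result = {"bucket_name": "example-bucket"}
safe_call(result, "GetBucketOwnershipControls", denied, Bucket="example-bucket")
safe_call(result, "GetBucketPolicy", no_policy, Bucket="example-bucket",
          ignore_codes=("NoSuchBucketPolicy",))
print(result["errors"])  # ['GetBucketOwnershipControls: AccessDenied']
```

Expected conditions (like a bucket with no policy) stay quiet, while anything unexpected ends up in the response body you see when you click Test.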

We are getting close to the end of this series, so the next few labs will be heavy on code. We still need to analyze the state of the buckets and, if needed, lock them down so they can only be accessed from our Organization. We also need to set up our time-based sweep to analyze all buckets, which is very tricky from a performance standpoint.

I appreciate you sticking with me on this extended series. By the end you should have all the tools you need to write your own enterprise-scale cloud security automation. This is absolutely an advanced skill!

-Rich
